Mapping IAEA Verification Tools to International AI Governance: A Mechanism-by-Mechanism Analysis

The International Atomic Energy Agency (IAEA) is the United Nations’ “nuclear watchdog.” It safeguards nuclear technology to ensure it is not diverted for military purposes, develops nuclear safety standards, and supports the peaceful use of nuclear energy. As advanced AI systems develop capabilities with serious security, economic, and social implications, parallels to the nuclear field have prompted proposals for an “IAEA for AI”: an international organization dedicated to overseeing AI development. UN Secretary-General António Guterres endorsed this concept in June 2023, and Google DeepMind co-founder Demis Hassabis has expressed support for an IAEA equivalent for AI. Simon Institute experts have suggested that it could be one stepping stone toward an agile regime complex for AI.

The AI-nuclear parallel has its limits: governments and commercial actors play different roles in developing each technology, AI is relatively easy to develop compared with nuclear technology, and verification measures differ in enforceability. Rather than argue for or against an “IAEA for AI” as an institution, this research (and the newly published full report) examines one aspect of IAEA safeguards. It systematically analyzes the verification tools that the Department of Safeguards uses for State nuclear program evaluations and assesses each for potential applicability to AI-related verification. The IAEA nuclear safeguards regime has shown significant success in monitoring nuclear technology development worldwide, and this study offers a framework and potential toolkit that stakeholders can use to design future multilateral verification systems for AI.

Why verification tools matter for international AI governance

Verification is the process used to confirm whether actors are complying with agreed-upon rules or obligations. In an advanced AI governance context, verification can enhance trust between developers and other stakeholders, serve as an early warning system for dangerous activity, potentially detect diversion of high-risk hardware, and provide a foundation for future international agreements. Without verification, AI safety efforts risk being strained by mistrust and by the unregulated development of systems potentially vulnerable to misuse.

AI-focused verification may take many forms, and could involve establishing evidence-based processes to confirm whether an advanced AI developer is meeting standards for development or dissemination. Existing literature discusses at length the challenges and opportunities for implementing verification measures at both the institutional and technical levels, exploring approaches such as the use of tamper-proof AI chips in data centers, human inspectors for on-site evaluations, and cryptographic signatures for data authentication. This research contributes to these discussions, identifying additional tools and frameworks drawn from the IAEA’s experience and exploring whether lessons from the nuclear safeguards State evaluation toolkit can inform the design of trustworthy verification mechanisms for advanced AI.

Overview of IAEA verification tools

The IAEA’s Department of Safeguards is responsible for assessing whether a State has adhered to its international nuclear safeguards-related obligations, ensuring that nuclear technology and material are being used only for civilian purposes and are not being diverted for nuclear weapons programs. This ongoing process of safeguards-related information analysis and the drawing of relevant conclusions is known as State evaluation. State evaluation is conducted using three main information streams:

- State-provided information is the information that a State – either voluntarily or by obligation – reports to the IAEA.

- Information from safeguards activities is data collected by the IAEA itself, generally from inspections or other in-field activities.

- Other relevant information includes additional uncategorized information from open sources, IAEA databases, or information voluntarily provided by States about other States.

These data sources are used in tandem to draw conclusions about State nuclear programs on a roughly annual basis, determining whether a State is in compliance with its IAEA obligations. What makes the IAEA analytical system work is this cross-corroboration of data: information is compared across the three streams, and the built-in redundancy and layering help ensure that the information is accurate.
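The cross-corroboration idea can be illustrated with a small Python sketch. This is not the IAEA's actual analytical procedure, which is far richer than anything shown here; the stream labels, the `Observation` type, the `corroborate` function, and all sample data are hypothetical and exist only to show how agreement, conflict, and single-source claims might be separated.

```python
from collections import defaultdict
from dataclasses import dataclass

# Labels for the three information streams (names are illustrative assumptions).
STREAMS = ("state_provided", "safeguards_activities", "other_relevant")


@dataclass(frozen=True)
class Observation:
    stream: str   # one of STREAMS
    subject: str  # what the observation is about (hypothetical identifier)
    value: str    # the reported or observed value


def corroborate(observations):
    """Classify each subject by how its observations agree across streams."""
    by_subject = defaultdict(dict)
    for obs in observations:
        if obs.stream not in STREAMS:
            raise ValueError(f"unknown stream: {obs.stream}")
        by_subject[obs.subject].setdefault(obs.stream, set()).add(obs.value)

    results = {}
    for subject, per_stream in by_subject.items():
        distinct_values = set().union(*per_stream.values())
        if len(distinct_values) > 1:
            results[subject] = "inconsistent"    # values disagree: follow up
        elif len(per_stream) >= 2:
            results[subject] = "corroborated"    # same value from 2+ streams
        else:
            results[subject] = "single-source"   # nothing to compare against
    return results


# Made-up sample data for illustration only.
observations = [
    Observation("state_provided", "facility-A:status", "operating"),
    Observation("safeguards_activities", "facility-A:status", "operating"),
    Observation("state_provided", "facility-B:status", "shut down"),
    Observation("other_relevant", "facility-B:status", "operating"),
    Observation("other_relevant", "facility-C:status", "under construction"),
]
results = corroborate(observations)
print(results)
```

In this toy version, a claim that appears in only one stream is not treated as false, only as uncorroborated, while a conflict between streams is surfaced for follow-up rather than resolved automatically.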

This research provides an overview of 24 specific verification tools used across these three categories. Appendix A describes each IAEA verification tool and assesses its potential applicability to AI, addressing the technical specifications and challenges of implementation.

Examples of IAEA verification tools applied to AI governance

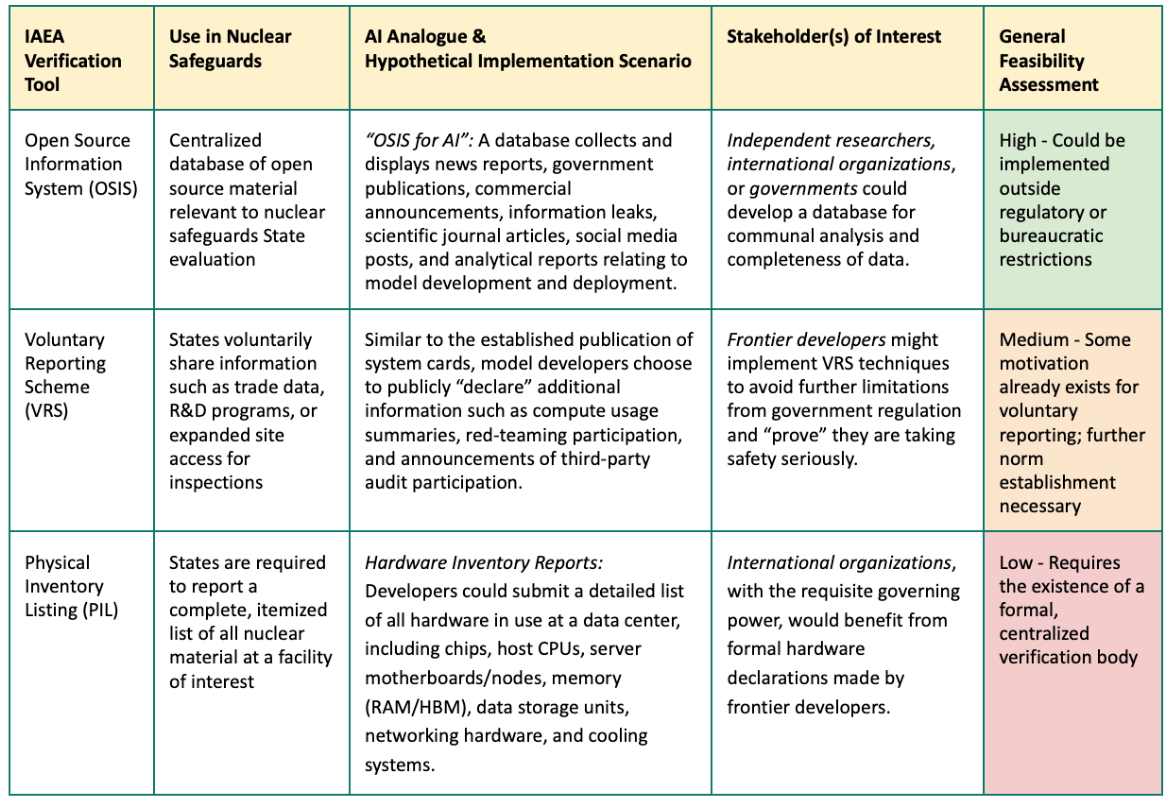

Below are three examples of IAEA verification tools that show different levels of promise in an AI governance context. Each tool’s applicability and feasibility for advanced AI model verification is rated high, medium, or low.

Table 1: Overview of Three Selected IAEA Verification Tools Mapped to AI Governance

One tool of particular applicability to the AI governance field is the IAEA’s Open Source Information System (OSIS). The Open Source team within the IAEA Department of Safeguards uses OSIS, an internally developed, centralized database, to automate the collection and processing of open source information. A curated selection of keywords related to the nuclear fuel cycle and relevant technologies and materials drives the web scraping: OSIS automatically retrieves news reports, government publications, commercial announcements, social media entries, blog posts, analytical reports, and scientific journal articles of safeguards relevance. In addition, OSIS can collect non-textual media such as photos, videos, or audio data.

A database for AI-relevant open source information could be a feasible step for AI governance actors, whether governments, multilateral institutions or researchers. A centralized repository of AI-focused open source information, made public, could consist of advanced model release announcements, research publications, corporate disclosures, research collaborations, conference proceedings, social media activity, patent filings, and shipping information on compute hardware. Tags in this database could include country of interest, company, specific AI model of interest, and relevant hardware.
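As a rough illustration of what such a repository might store, the Python sketch below models a tagged record, a keyword relevance check, and a tag-based filter. Everything in it is an assumption made for illustration: the field names mirror the tags suggested above, while the keyword list, the `Record` type, the helper functions, and the sample entries are hypothetical and do not describe how OSIS itself is implemented.

```python
from dataclasses import dataclass

# Hypothetical curated keywords; a real list would be maintained by analysts.
KEYWORDS = ("model release", "training run", "gpu", "data center")


@dataclass(frozen=True)
class Record:
    title: str
    source_type: str      # e.g. "corporate disclosure", "patent filing"
    country: str = ""     # country of interest
    company: str = ""
    model: str = ""       # specific AI model of interest
    hardware: str = ""    # relevant hardware


def is_relevant(text, keywords=KEYWORDS):
    """Return True if any curated keyword appears in the text."""
    lowered = text.lower()
    return any(keyword in lowered for keyword in keywords)


def filter_by_tags(records, **tags):
    """Return the records whose tag fields match every given value."""
    return [
        record for record in records
        if all(getattr(record, name) == value for name, value in tags.items())
    ]


# Made-up entries for illustration only.
records = [
    Record("ExampleLab announces Model-X model release", "corporate disclosure",
           country="Country A", company="ExampleLab", model="Model-X"),
    Record("ExampleLab opens new data center", "news report",
           country="Country B", company="ExampleLab", hardware="GPU cluster"),
    Record("Local bakery wins award", "news report", country="Country A"),
]

relevant = [record for record in records if is_relevant(record.title)]
country_a = filter_by_tags(relevant, country="Country A")
print([record.title for record in country_a])
```

Simple substring matching keeps the sketch short; a production system would likely need more careful text processing, deduplication, and provenance tracking for each item.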

An “OSIS for AI” could be an attractive short-term step for several reasons. First, creating a publicly accessible database does not require state or AI developer consent; with proper funding, individual researchers or institutions could create it quickly and independently. Second, the tool relies on publicly available information and so sidesteps issues of access or sovereignty. Third, it offers a low-barrier entry to verification work and, if made public, would make governance research more streamlined and accessible for the wider AI community. The result would be a credible, independent layer of societal verification operating outside the scope of formal institutions, building on existing societal verification work in the nuclear sphere. Public-facing organizations already regularly analyze and centralize nuclear-related data (e.g., the Federation of American Scientists’ Nuclear Notebook), offering additional precedent for AI-focused societal verification work.

Three inspirations for international AI governance

The IAEA provides a mature, technical precedent for nuclear-focused, multilateral verification. While no international regulatory body for AI currently exists, understanding the existing international nuclear safeguards regime at a granular, technical level can inform, and potentially guide, the examination of options for future international AI verification. The IAEA experience demonstrates how a multilevel system of carefully designed and consistently applied verification tools can contribute to a robust international governance regime.

Three broader lessons from IAEA’s verification system and practice stand out for their potential applicability and feasibility for advanced AI governance:

- Create an “Open Source Information System (OSIS) for AI.” This is one of the strongest candidates for a short-term, high-feasibility verification tool that could add value to AI governance. An independent AI research organization or a consortium of research institutions with the proper resources could develop an OSIS that collects, tags, and otherwise centralizes open source information related to model updates or compute-related milestones. While coverage would be unavoidably incomplete, its creation could improve situational awareness, help identify trends in model release best practices, and improve reporting on safety and security incidents.

- Establish a global verification research initiative. Several initiatives within the UN and the wider multilateral system already focus on AI safety and ethics. However, none is focused specifically on AI verification. A new verification-centric initiative could be launched within the architecture of one of these existing programs. This entity could also work to develop formal standards for verifying advanced AI model development.

- Promote overlapping information streams for verification. Following the IAEA’s three-information-stream model for data collection and analysis, multilateral AI governance deliberations could consider options for verification using three categories of information: open sources, voluntary disclosures, and third-party activities such as measurements, inspections, or compute monitoring. This approach provides redundancy and greater confidence as information can be corroborated across the different streams.

Acknowledgements:

Christina Krawec is an international security professional with prior experience working at the International Atomic Energy Agency conducting open source analysis for State evaluation. This research was supported by the ERA Fellowship and mentored by Kevin Kohler and Belinda Cleeland. The views expressed in this report are those of the author and do not reflect the official policy or position of the Simon Institute. The author would like to thank the ERA Fellowship for its financial and intellectual support.